Learning from High-Dimensional Data via Transformations

Dr. Hossein Mobahi, MIT

12:00pm Monday, 29 February 2016, ITE325b

High-dimensional data is ubiquitous in the modern world, arising in images, movies, biomedical measurements, documents, and many other contexts. The “curse of dimensionality” tells us that learning in such regimes is generally intractable. However, practical problems often exhibit special simplifying structures which, when identified and exploited, can render learning in high dimensions tractable. This is a promising prospect, but getting there is not trivial. In this talk, I will address two challenges associated with high-dimensional learning and discuss my proposed solutions.

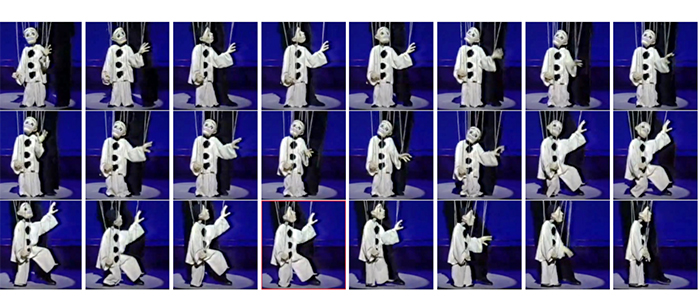

First, parsimony (sparsity, low rank, etc.) is one of the most prevalent structures in high-dimensional learning applications. However, its presence is often implicit, and it reveals itself only after a transformation of the data. Studying the space of such transformations and the associated algorithms for their inference constitutes an important class of problems in high-dimensional learning. I will present some of my work in this direction related to image segmentation. I will show how low-rank structures become abundant in images when certain spatial and geometric transformations are considered. This work resulted in a state-of-the-art algorithm for segmentation of natural images.
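To make the idea of implicit low-rank structure concrete, here is a minimal NumPy sketch. It is a toy illustration only and not the segmentation algorithm from the talk: the 1-D `pattern`, the `shifts`, and all variable names are assumptions made for this example. Each row of `raw` is the same signal under a different circular shift, so the matrix looks high rank until the shifts are undone.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical 1-D "texture" observed under different spatial shifts.
pattern = rng.standard_normal(32)
shifts = np.arange(8) * 3  # assumed known here; in practice they must be inferred

# Each row is the same pattern, circularly shifted by a different amount.
raw = np.stack([np.roll(pattern, s) for s in shifts])

# Undo each shift (the "transformation") so that all rows line up.
aligned = np.stack([np.roll(row, -s) for row, s in zip(raw, shifts)])

rank_raw = np.linalg.matrix_rank(raw)
rank_aligned = np.linalg.matrix_rank(aligned)
print("rank before alignment:", rank_raw)
print("rank after alignment: ", rank_aligned)
```

In this sketch the shifts are given. Inferring the right transformation from the data is the hard part that the abstract refers to.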

Second, important scenarios such as deep learning involve high-dimensional nonconvex optimization. Such optimization is generally intractable. However, I show how some properties of the optimization landscape, such as smoothness and stability, can be exploited to transform the objective function into simpler subproblems, from which reasonable solutions can be obtained efficiently. The theory is derived by combining the notion of convex envelopes with differential equations. This results in algorithms involving high-dimensional convolution with the Gaussian kernel, which I show has a closed form in many practical scenarios. I will present applications of this work in image alignment, image matching, and deep learning. Furthermore, I will discuss how this theory justifies heuristics currently used in deep learning and suggests new training algorithms that offer a significant speedup.
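The following sketch illustrates the general idea of optimizing through Gaussian smoothing on a one-dimensional toy problem. It is not the speaker's algorithm: the objective `f`, the smoothing schedule, and the step size are all assumptions chosen for illustration. For a polynomial, convolution with a Gaussian of standard deviation `sigma` has a closed form, since E[(x + sigma z)^2] = x^2 + sigma^2 and E[(x + sigma z)^4] = x^4 + 6 sigma^2 x^2 + 3 sigma^4 for standard normal z.

```python
import numpy as np

def f(x):
    # Toy nonconvex objective (assumed): a tilted double well.
    return x**4 - 2 * x**2 + 0.5 * x

def smoothed_grad(x, sigma):
    # Gradient of the Gaussian-smoothed objective, in closed form:
    # g(x; sigma) = x^4 + (6 sigma^2 - 2) x^2 + 0.5 x + const.
    return 4 * x**3 + 2 * (6 * sigma**2 - 2) * x + 0.5

def descend(x, sigma, steps=500, lr=0.01):
    # Plain gradient descent on the smoothed objective at a fixed sigma.
    for _ in range(steps):
        x = x - lr * smoothed_grad(x, sigma)
    return x

x0 = 2.0

# Baseline: gradient descent on the original objective (sigma = 0).
x_plain = descend(x0, sigma=0.0, steps=2000)

# Continuation: start heavily smoothed, then shrink sigma to zero,
# warm-starting each stage from the previous minimizer.
x_cont = x0
for sigma in np.linspace(1.0, 0.0, 21):
    x_cont = descend(x_cont, sigma)

print("plain descent:", x_plain, f(x_plain))
print("continuation: ", x_cont, f(x_cont))
```

With this starting point, plain descent settles in the shallower well on the right, while the continuation path follows the smoothed minimizer into the deeper well on the left. The smoothed objective is convex whenever sigma^2 >= 1/3, which is why the first stages are easy to solve.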

Hossein Mobahi is a postdoctoral researcher in the Computer Science and Artificial Intelligence Lab at the Massachusetts Institute of Technology. His research interests include machine learning, computer vision, optimization, and especially the intersection of the three. He obtained his PhD from the University of Illinois at Urbana-Champaign in December 2012. He is the recipient of the Computational Science & Engineering Fellowship, the Cognitive Science & AI Award, and the Mavis Memorial Scholarship. His recent work on machine learning and optimization has been covered by MIT News.