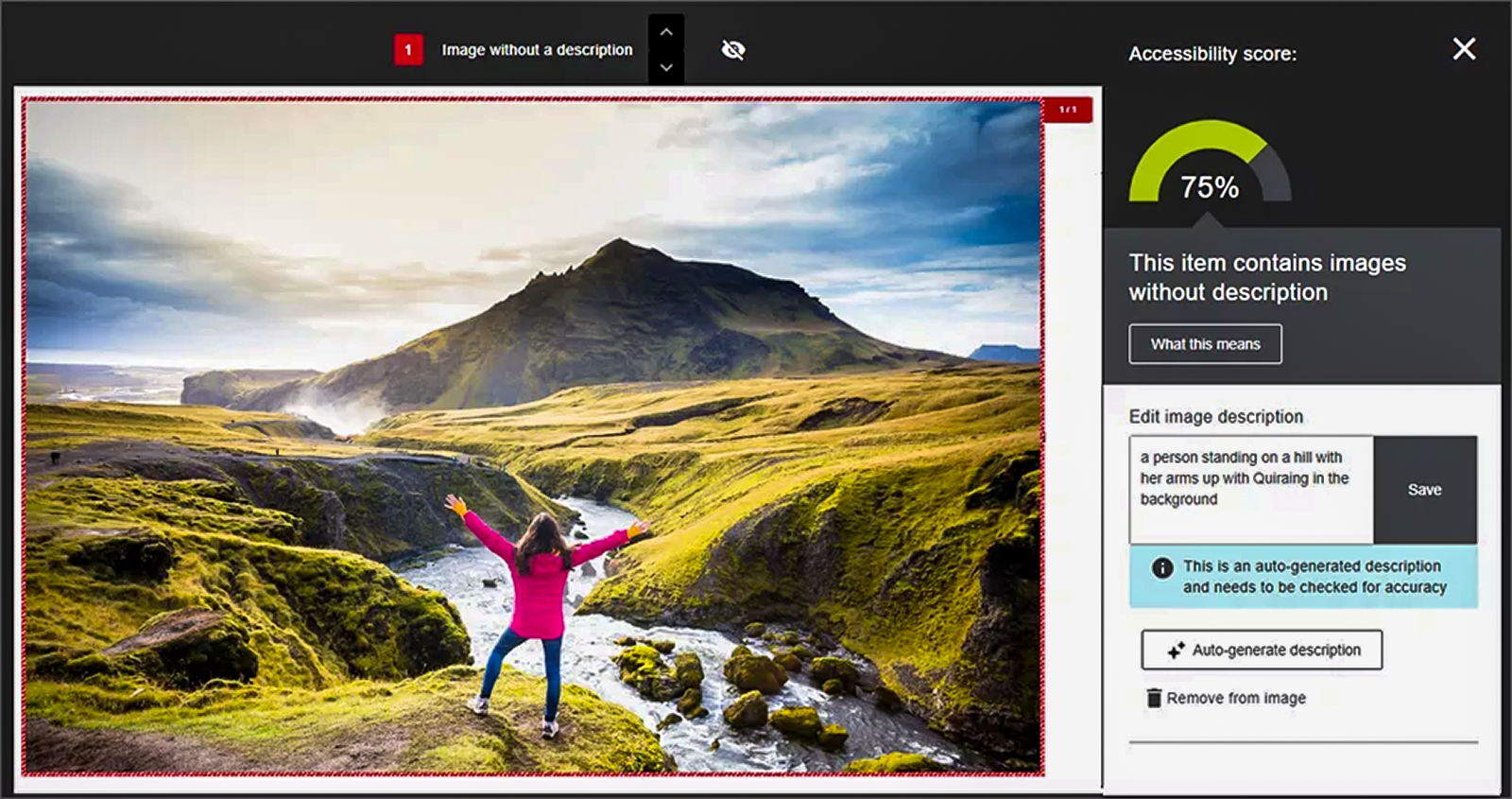

Example of Ally's AI-generated image description.

Here's an interesting application of new GenAI systems: helping instructors make their course materials more accessible. A web accessibility best practice is to give every non-decorative image a descriptive alternative text (alt text). This makes the content accessible to users with disabilities, particularly those who are blind or visually impaired.

The latest version of Ally, the accessibility tool built into UMBC's Blackboard system, finds images that lack alt text and offers to generate descriptions for them automatically.

Ally flags images in a Blackboard course that lack a description. Instructors can enter a description themselves, mark the image as decorative, or use an AI tool, powered by Microsoft Azure AI Vision, to scan the image and suggest a description. Instructors retain full control: they can edit the suggested text to correct errors or clarify information before saving it.
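This post doesn't describe how Ally detects missing descriptions internally, but the basic check is easy to illustrate. In HTML, an `<img>` with no `alt` attribute has no description at all, while an empty `alt=""` is the conventional way to mark an image as decorative. The sketch below sorts a page's images into those groups using Python's standard `html.parser` module. `AltTextAuditor` and `audit` are hypothetical names for illustration; this is not Ally's or Blackboard's implementation.

```python
from html.parser import HTMLParser


class AltTextAuditor(HTMLParser):
    """Hypothetical helper: groups <img> tags by alt-text status (not Ally's code)."""

    def __init__(self):
        super().__init__()
        self.missing = []     # images with no alt attribute: need a description
        self.decorative = []  # images with an empty alt (alt=""): marked decorative
        self.described = []   # images with a non-empty alt description

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        attributes = dict(attrs)
        src = attributes.get("src", "")
        if "alt" not in attributes:
            self.missing.append(src)
        # A bare `alt` attribute parses as None; treat it, "", and
        # whitespace-only values as decorative in this sketch.
        elif not (attributes["alt"] or "").strip():
            self.decorative.append(src)
        else:
            self.described.append(src)


def audit(html):
    """Run the auditor over an HTML string and return it with results filled in."""
    auditor = AltTextAuditor()
    auditor.feed(html)
    auditor.close()
    return auditor


# Example course page with one image of each kind.
page = """
<p>Week 3 notes</p>
<img src="diagram.png">
<img src="divider.png" alt="">
<img src="chart.png" alt="Bar chart of enrollment by semester">
"""
report = audit(page)
print("Needs alt text:", report.missing)
```

Only the images in `report.missing` would need attention: an instructor would either write a description, accept and edit an AI suggestion, or mark the image decorative.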

You can read more about this new Ally capability in this post from UMBC's Instructional Technology group.

UMBC Center for AI